Lecture 01 2022/01/18

- Why study OS

- To learn the language of systems: abstraction

- To understand issues of system design

- To under stand how computers work

- What is OS

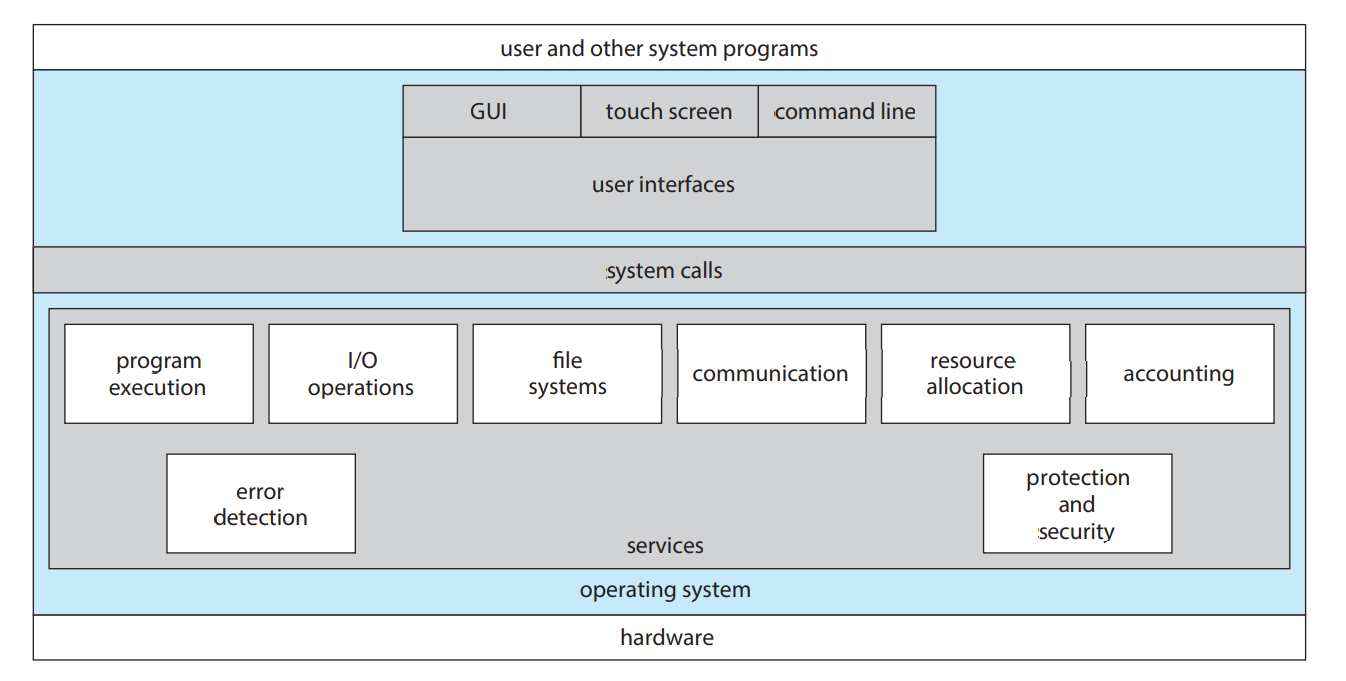

- An OS is a program that acts as an intermediary between a user and the computer hardware

- Goals

- Execute user programs and maker solving user problems easier

- Make the computer system convenient to user

- Use the computer hardware in an efficient manner

- Computer system components

- Hardware: CPU, memory, I/O devices

- OS

- Application programs

- Users: people, machines, other computers

- What the OS does

- OS is a resource allocator: manage all resources

- OS is a control program: control execution of programs

- Resource Management

- Resource abstraction (top-down view)

- Abstract from functionality of hardware, to achieve ease of use and device independence

- Resource sharing (bottom-up view)

- manage contention for resources

- The one program running at all times on a computer is the kernel, everything else is either a system program or an application program

- Resource abstraction (top-down view)

- Key OS concepts

- Processes = programs + state

- Program in execution, also include some state information

- Context switch: change control from one process to another

- Concurrency

- Used for performance

- Programs do not corrupt, programs can achieve goals (fairness, performance,...), do not deadlock

- Memory management

- Each process has access to entire machine

- Abstraction + sharing

- Device management

- Each process has access to all devices

- Abstraction + sharing

- Processes = programs + state

- Overview of machine organization

- CPU: a processor for interpreting and executing programs

- Memory: for storing data and programs

- Controller: mechanism for transferring data from/to outside world, there is a controller for each device

- Buss: set of wires, move data and instructions within units of the

machine

- Buss should contains both data and address

- Primary movement on buss

- CPU

- CPU

- Memory

- CPU

Lecture 02 2022/01/20

- CPU

- CPU = ALU + Control Unit

- ALU (arithmetic logic unit): performs computations

- Control unit: interprets program instructions

- CPU fetches, decodes and executes instructions on the correct data

- Control unit makes sure the correct data is where is needs to be

- ALU: stores and performs operations on data

- CPU understands only assembly language

- ALU

- ALU = Registers + Function unit

- Registers: storage units for data (addresses, PC, operands, ...)

- Registers are fast and expensive

- Cetegories:

- GPR (general purpose registers): set directly by the software, stores data

- SR (status register): set as a side-effect of software

- The amount of registers is the power to 2, typically 32-64

- Each register holds a word, the size of a word is the amount that could be stored in a register, it's also the amount of data that could be transferred by one bus at a time

- Function unit: evaluate arithmetic and logic units

- Operations in FU can affect bits in the SR

- FU is controlled by signals from the CU, so it knows which operation to perform

- Registers and units are connected through the CPU bus, timing of the movement of data is controlled by CPU clock

- Generate assembly language

- Higher level languages are converted to assembly language using a compiler

- Movement of data

- Memory

LOAD - Register

STORE - Memory

READ - Disk

WRITE

- Memory

- System call: request to OS to perform service, change from user mode to kernel mode

- Source code

- Control unit

- PC (program counter): a register for the CPU, stores the address where the program is stored in memory

- IR (instruction register): a register for the CPU, holds instruction currently being executed or decoded

- CU performs the "Fetch-Decode-Execute" cycle after instructions are

loaded into memory by the loader

- Get the instruction whose address is at PC, store the instruction in IR

- Decode instruction in IR to identify operation type and inputs

- Execute instructions with ALU

- Increment PC (by 4 in the 32-bit configuration)and go back to fetch another instruction

- This cycle is controlled by the halt flag. THe halt flag is the

first bit in the SR, when it is set to CLEAR, the bit is set to 0, and

the program runs. When the last line of the program is executed, IR has

the halt instruction, and the halt flag is set to 1, then the program

stops

1

2

3

4

5

6

7PC = <start address>

IR = M[PC]

haltflag = CLEAR

while (haltflag is CLEAR)

Execute (IR)

Increment (PC)

IR = M[PC]

- Booting

- OS is not in ROM, but on the disk

- OS is large

- OS needs to be changed when needed (update)

- BIOS (non-volatile firmware on flash memory of motherboards): part of hardware, initializes hardware and runs the bootstrap loader

- Bootstrap loader: part of software, resides in ROM, it loads OS into memory

- BL algorithm

1

2

3

4

5

6

7R3 := 0 // Size of transferred data

R2 := SIZE_OR_TARGET // Size of OS

while R3 < R2 do

R1 := disk[FIXED_DISK_ADD + R3]

M[FIXED_MEM_ADD +R3] := R1

R3 : R3 + 4

goto FIXED_MEM_ADD

- OS is not in ROM, but on the disk

Lecture 03 2022/01/25

- Assumption and notation

- Assumptions

- 16 GPR (R1, ..., R16)

- 16 SR (S1, ..., S16)

- Instruction size: 4 bytes

- Notation

M[x]: contents of memory at address xD[x]: contents of disk at address xRi: contents of GPR Ri- PC: program counter

<-: assignment

- Assumptions

- Load: transfer data from memory to cpu

- Direct addressing

- Syntax:

LOAD Ri, Addr - Meaning:

Ri <- M[Addr] - Example:

LOAD R1, 3000

- Syntax:

- Immediate operand

- Syntax:

LOAD Ri, =Num - Meaning:

Ri <- Num - Example:

Load R2, =100

- Syntax:

- Index addressing

- Syntax:

LOAD Ri, [Addr, Rj] - Meaning:

Ri <- M[Addr + Rj] - Example:

Load R3, [3000, R4]

- Syntax:

- Indirect addressing

- Syntax:

LOAD Ri, @ Addr - Meaning:

Ri <- M[M[Addr]] - Example:

Load R5, @ 3000

- Syntax:

- Relative Addressing

- Syntax:

LOAD Ri, $Num - Meaning:

Ri <- M[PC + Num] - Example:

LOAD R6, $100,LOAD R7 $R8

- Syntax:

- Direct addressing

- Store: transfer data from cpu to memory

- Direct addressing

- Syntax:

STORE Ri, Addr - Meaning:

M[Addr] <- Ri - Example:

STORE R1, 3000

- Syntax:

- Index addressing

- Syntax:

STORE Ri, [Addr, Rj] - Meaning:

M[Addr + Rj] <- Ri - Example:

STORE R2, [3000, R3]

- Syntax:

- Relative addressing

- Syntax:

STORE Ri, $Num, - Meaning:

M[PC + Num] <- Ri - Example:

STORE R4, $100,STORE R5, $R6

- Syntax:

- Direct addressing

- Arithmetic operations

- ADD

- Syntax:

Add Ri, Rj - Meaning:

Ri <-Ri + Rj

- Syntax:

- SUB

- Syntax:

SUB Ri, Rj - Meaning:

Ri <- Ri - Rj

- Syntax:

- MUL

- Syntax:

MUL Ri, Rj - Meaning:

Ri <- Ri * Rj

- Syntax:

- DIV

- Syntax:

DIV Ri, Rj - Meaning:

Ri <- Ri / Rj(integer division),Rj <- Ri % Rj - Example

1

2

3LOAD R1, = 11

LOAD R2, = 4

DIV R1, R2 // R1 = 2, R2 = 3

- Syntax:

- INC

- Syntax:

INC Ri - Meaning:

Ri <- Ri + 1

- Syntax:

- ADD

- Labels

- Syntax:

LOOP: instruction - This is the same meaning as the instruction alone, but it is used with branch instructions to construct a loop

- If the "LOOP" label appears at memory address 3000, then throughout the code, "LOOP" is equivalent to 3000

- Syntax:

- SKIP

- Syntax:

SKIP - Meaning: time delay, cpu pauses for about 300 cpu cycles

- Syntax:

- Branch

- BR

- Syntax:

BR label - Meaning: branch to label

- Syntax:

- BLT/BGT/BLEQ/BGEQ

- Syntax:

BLT Ri, Rj, label - Meaning: branch to label if Ri < Rj

- Syntax:

- BR

- READ: transfer data from hardware to cpu

- Direct addressing

- Syntax:

READ Ri, DiskAddr - Meaning:

Ri <-D[DiskAddr] - Example:

READ R1, 30000

- Syntax:

- Index addressing

- Syntax:

READ Ri, [DiskAddr, Rj] - Meaning:

Ri <- D[DiskAddr + Rj] - Example:

Read R2, [30000, R3]

- Syntax:

- Direct addressing

- WRITE: transfer data from cpu to hardware

- Direct addressing

- Syntax:

WRITE Ri, DiskAddr - Meaning:

D[DiskAddr] <- Ri - Example:

WRITE R1, 30000

- Syntax:

- Index addressing

- Syntax:

WRITE Ri, [DiskAddr, Rj] - Meaning:

D[DiskAddr + Rj] <- Ri - Example:

WRITE R2, [30000, R3]

- Syntax:

- Direct addressing

- HALT

- Syntax:

HALT - Meaning:

haltflag <- 1

- Syntax:

- Exercise

- Given

1

2

3

4

5

6

7

8

9

10

11

12at x READ R1, d1 // b

at x+ 4 READ R2, d2 // p

at x + 8 LOAD R3, =1 // i

at x + 12 LOAD R4, =4 // step

at x + 16 LOAD R5, =1 // res

at x + 20 LOAD R6, = 972 // initial offset

at x + 24 LOOP: MUL R5, R1 // res *= b

at x + 28 STORE R5, $R6 // M[offset] = res

at x + 32 Add R6, R4 // offset += step

at x + 36 INC R3 // i+= 1

at x + 40 BLEQ R3, R2, LOOP // while i <= p

at x + 44 HALT // stop

- Given

- Binary representation

- Convert decimal number to binary form:

- Convert decimal number to binary form:

- Memory

- Memory is divided into cells, typical cell size is 1 byte = 8 bits, each cell has an address

- If the computer is m-bit (the length of cpu register is m), then it

can be mapped to

- There are three registers used to handle data transaction between

cpu and memory

- MAR (memory address register): stores memory address from which data will be fetched or to which data will be stored

- MDR (memory data register): stores data being transferred to and from memory address specified by MAR, it can both load data and store data

- CMD (command register): indicates whether the command is load or store

- Example

1

2

3

4

5

6

7

8

9STORE R1, 3000

MDR <- R1

MAR <- 3000

CMD <- WRITE

LOAD R1, 3000

MDR <- R1

MAR <- 3000

CMD <- READ

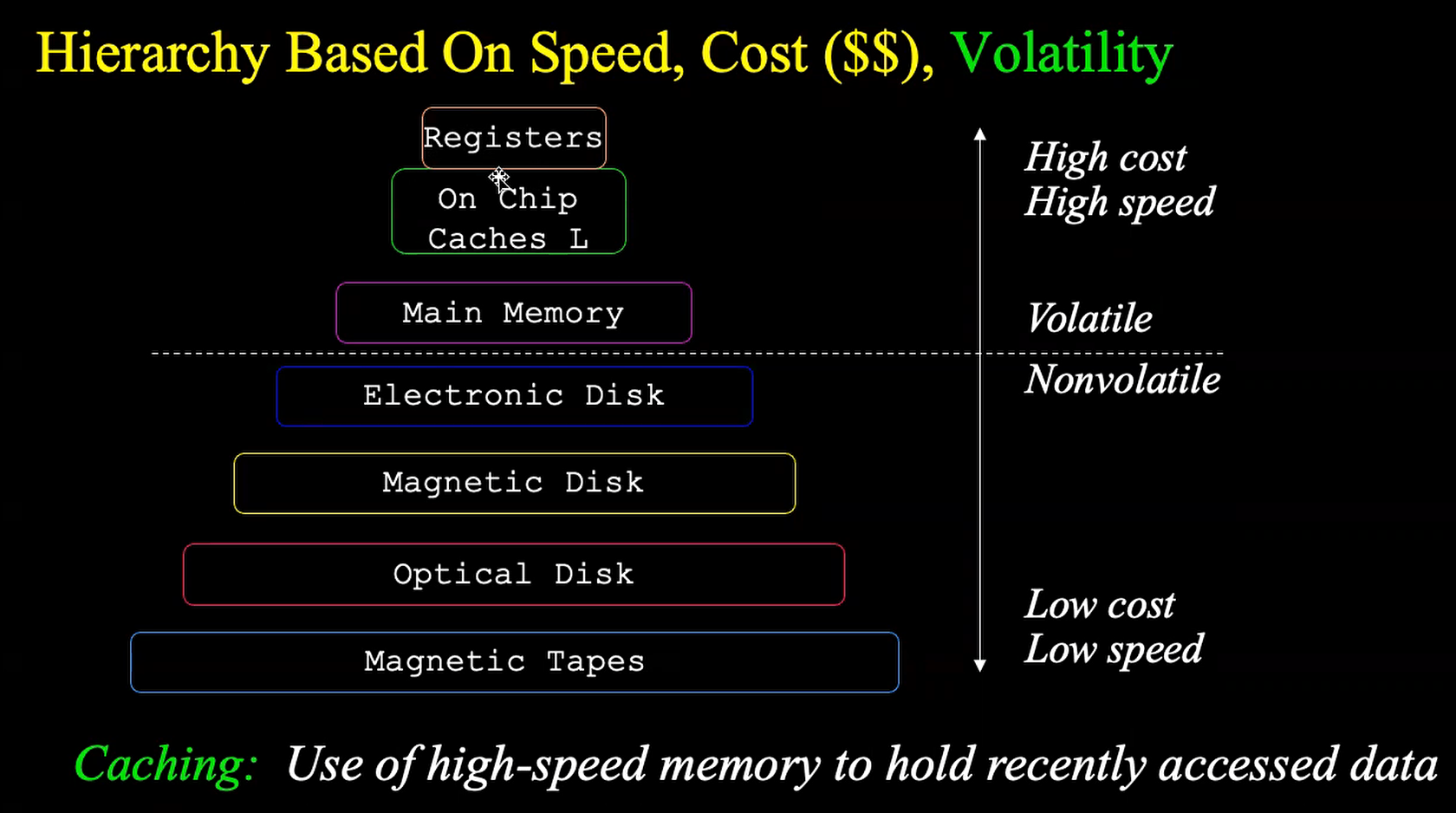

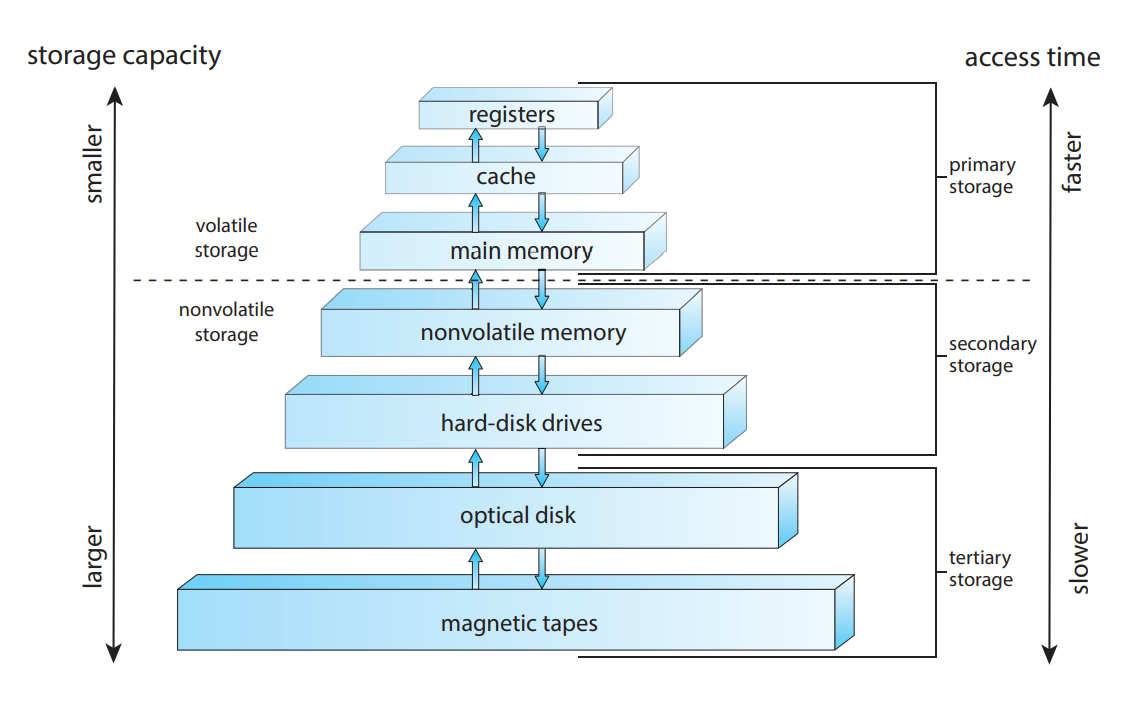

- Memory categories

- Main memory: CPU can access directly, this is volatile (when the computer is shut down, the data in the main memory is lost)

- Secondary storage: extension of main memory that provides large, nonvolatile storage capacity

- Hardware hierarchy

- Devices and controllers

- I/O devices: keyboard, mouse, monitor, printer, disk, ...

- Device controllers: hardware interface between device and bus, handlers the I/O signals of CPU, device controllers are physical chips, but device drivers are softwares

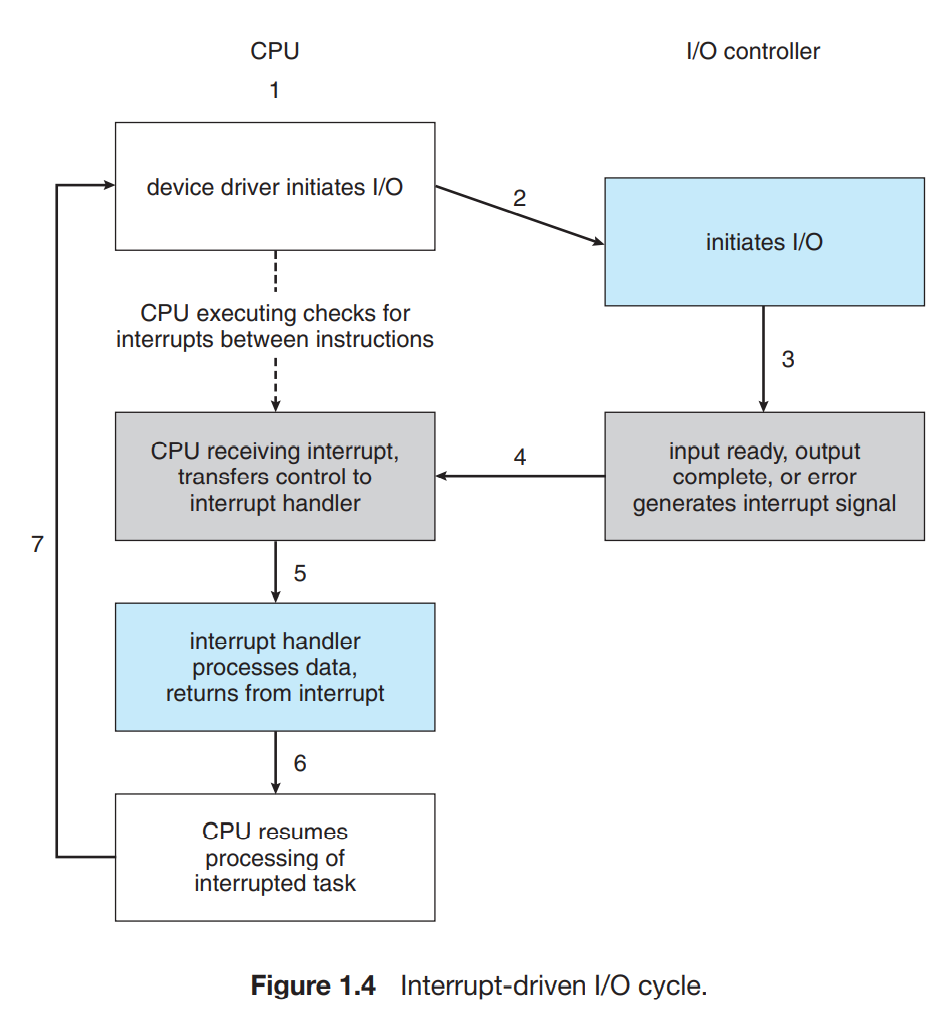

- Interrupts

- One I/O interrupt example:

- CPU issues a read request to the device through the device driver

- Device driver signals the device controller to get the data while CPU does other work

- Device will interrupt the CPU when ready to transfer the data

- CPU transfers control to the device

- Device transfers the data to CPU

- A trap/exception is a software-generated interrupt caused either by an error or a user request

- How interrupt notifies CPU

- Method 1: interrupt request flag(IR flag)

- One status register directly wired to the device controller's DONE flag, when the DONE flag is set to be true, the CPU is notified through the IR flag

- For this approach, if there are multiple devices in the computer, cpu cannot know which one sends the interrupt request, so it needs to ask each device in turn (called polling procedure), which slows interrupt processing

- Method 2: interrupt vectors

- If the length of the interrupt vectors is

- The interrupt vector is part of the hardware

- If the length of the interrupt vectors is

- Method 1: interrupt request flag(IR flag)

- Fetch-decode-execute with interrupt involved

1

2

3

4

5

6

7

8

9

10PC = <start address>

IR = M[PC]

haltflag = CLEAR

while (haltflag is CLEAR)

Execute (IR)

Increment (PC)

if (interrupt request)

M[0] <- PC // reserved to store PC

PC <- M[1]/ PC <- M[i] // if IR flag is used, use M[1] is the interrupt handler, if interrupt vector is used, use M[i] as the interrupt handler for ith device

IR = M[PC]

- One I/O interrupt example:

Lecture 05 2022/02/01

- Respond to interrupt

- Procedures

- Save processor state (PC, registers, ...)

- Identify interrupting device and sets PC to address the appropriate device handler

- Device handler performs data transfer from device controller registers to cpu

- Interrupt during interrupt

- Problem 1: the later interrupt overwrites M[0]

- PC = 108 => 1st interrupt => M[0] <- 108, PC <- M[1]

- 2nd interrupt => M[0] <- M[1], PC <- M[2]

- When the 2nd interrupt id done, PC <- M[1], but we lost the first PC <- 108

- Solution: reserve additional memory for PC values, so different interrupt store their respective PC at different memory addresses

- Problem 2: wrong timing leads to unhandled interrupts

- Two interrupt arrives almost at the same time, then M[0] is set to the PC for the 2nd interrupt, then the 1st interrupt is unhandled

- Solution: set an interrupt disable other interrupts until cpu recognizes which devices are interrupting

- Problem 1: the later interrupt overwrites M[0]

- Procedures

- Kernel vs User mode

- OS and users share the hardware and software resources of a computer system

- OS should be able to protect the integrity of the computer, so OS needs to distinguish between kernel mode and user mode

- Switch between 2 modes of operation: a mode bit on one register:

- Uer mode: execution in user role, limited instructions, no privileged instructions

- Kernel mode: execution in OS role, privileged instructions are allowed, (interrupt, trap, fault are handled in this mode)

- All I/O instructions are privileged, so there is switch between user mode and kernel mode

- To prevent infinite loop/ process hogging resources, there is also transition from user to kernel mode, a timer is involved

- When the program logic is focused, the user does not need to care about which call triggers the switch between modes, but if efficiency is an issue, calls without switch between modes are preferred

- Processes

- Processes are abstraction of the CPU and the main memory

- Process is an abstraction of the cpu

- It gives an illusion of a uni-programming environment, every process acts as if it has exclusive access to cpu and memory (in reality, cpu is time-sliced, memory is space-sliced)

- Multiple processes increase cpu utilization

- Multiple processes reduce latency

- A thread is a lightweight process, it's an abstraction of the cpu, but not of the main memory

- Threads are mostly cooperative, processes are mostly independent

- A process is more than the program code, it consists of code section, data section, stack, registers(include the PC)

Lecture 06 2022/02/04

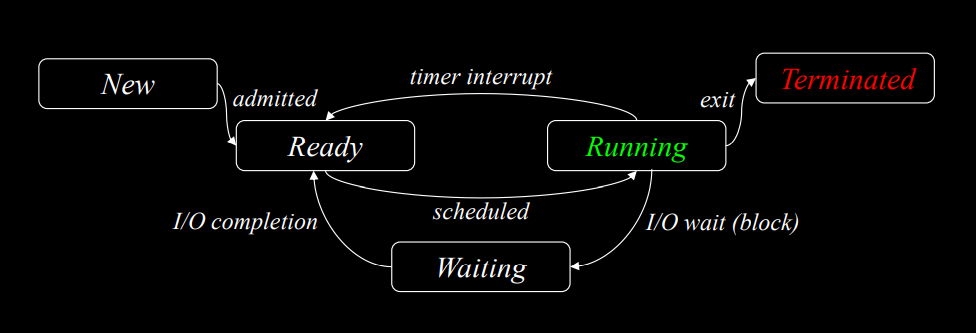

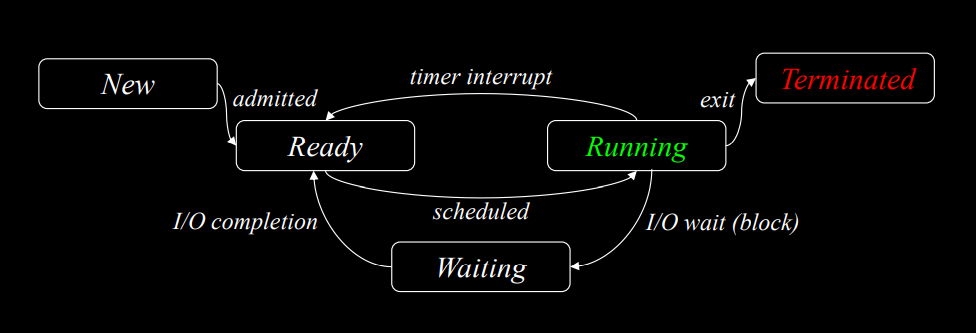

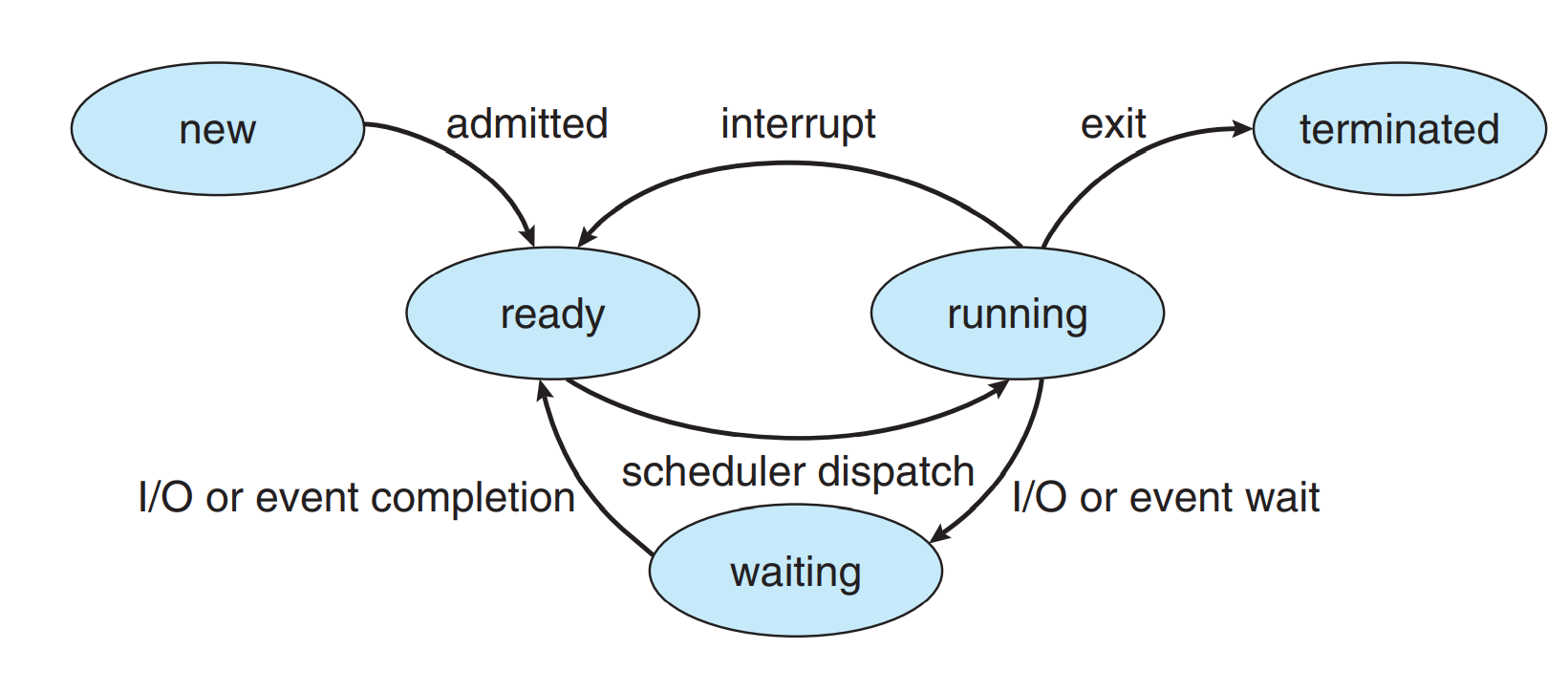

- Process state

- Processes are not always running, they have different states

- State:

- New: process is being created

- Running: instructions are being executed

- Waiting: process is waiting for some event to occur

- Ready: process is waiting to be assigned the cpu

- Terminated: process has finished execution

- Process control block

- Each process is represented in the os by a process control block, also called a task control block. It contains many information associated with a specific process

- Information

- Process state: new, running, etc.

- Process id

- PC

- Registers

- Memory limits

- Other information: list of opened files, etc.

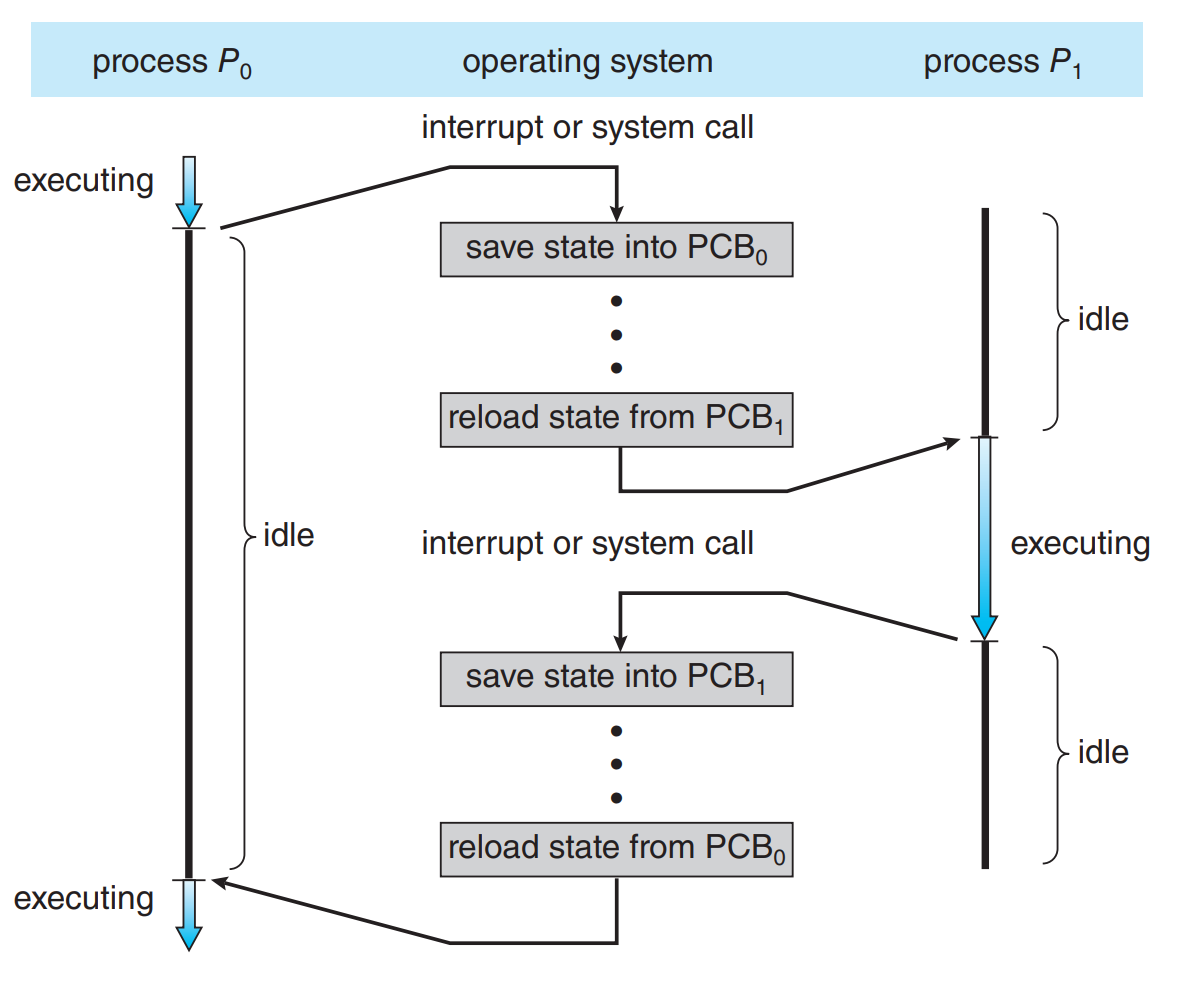

- Context switch:

- Process with access to cpu switched out (due to interrupt or system call), with state copied to pcb, another ready process switched in, also with state copied from pcb

- There is a queue managing processes, if a process is switched out, then it's enqueued, then another process is dequeued from the scheduling queue based on some criterion and switched in

- Pure overhead: during context switching, no useful work is done in cpu

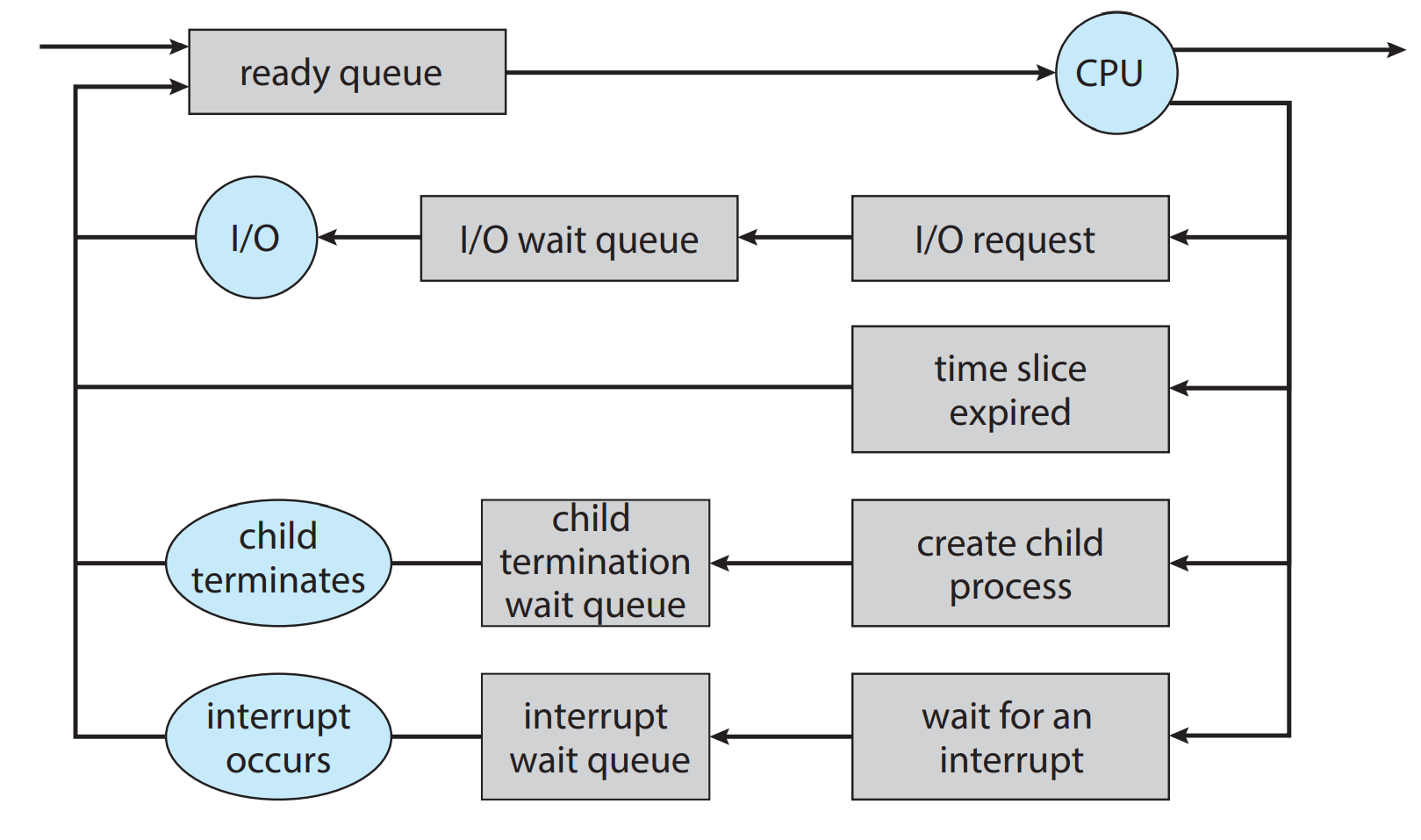

- Process scheduling

- Multiprogramming goal: maximize cpu utilization and enable users

interact with their running programs. This is achieved by process

scheduler, it selects the progress for program execution on the cpu

- Scheduler

- Long term scheduler (job selector): moves process from disk to main

memory

- Select which processes should be moved into ready queue

- Control degree of multiprogramming (# of processes in memory)

- Invoked infrequently, can be slow

- Need to make a mix of I/O bound processes (spend more time doing I/O)and cpu bound processes (spends more time doing computation)

- Short term scheduler: selects one process from memory and allocate

the cpu to it

- Similar to interrupt handler

- Select processes from ready queue

- Invoked frequently, should be fast

- Long term scheduler (job selector): moves process from disk to main

memory

- Multiprogramming goal: maximize cpu utilization and enable users

interact with their running programs. This is achieved by process

scheduler, it selects the progress for program execution on the cpu

- Process and memory

- Process address space contains text segment, data segment and stack segment

- Abstraction

- The process has access to whole memory, in reality memory is partitioned amongst processes

- Memory is contiguous, in reality memory consists of non-contiguous chunks (if a process is terminated, its memory is free, then there are empty chunks)

- Abstraction 1: process has exclusive access to all memory that it

can address

- Exclusivity: each process is assigned a base register and limit register defining its address space, so the memory space is [base register, base register + limit register]

- Access to entire address space: in reality, the entire memory space occupied by processes can be larger than physical memory using the virtual memory technique

- Abstraction 2: a process's address space is contiguous

- Store process's address space as non-contiguous set of contiguous chunks

- Process creation

- Process creation

- Parent process creates child processes, forming a tree of processes

- Child process possibilities

- Resource sharing: child process shares all sources from parent process, subset of parents's, none resource with the parent

- Execution: parent executes concurrently with children processes, parent process waits until all children processes are terminated

- Address space: child is a duplicate of parent, child has specified new program loaded

- Process creation in UNIX:

fork(),execv(path, argv)fork()- Creates new child processes by creating a copy of executing process within new address space, return 0 to newly created child process and return the pid of the child process to the parent

- Shared memory are shared between parent and child processes, stack and heap are copied between parent and child processes

execv(path, argv): replaces the process memory space with respect to specified program in path and arguments argvExample 1

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18int vaule = 5;

int main() {

pid_t pid;

pid = fork();

if (pid == 0) {

/*Only child executes*/

value += 15;

return 0;

} else if (pid > 0) {

/*Only parent executes*/

wait(NULL);

/*This should print 5*/

printf("Parent: value = %d\n", value);

return 0;

}

}Example 2

1

2

3

4

5int main() {

fork();

fork(); /*Both child and parent execute this*/

fork(); /*Both child and parent execute this*/

}

- Process creation

Lecture 07 2022/02/07

- Processes

- The process id for child is larger thant that of the parent process, but may not be consecutive

- They have separate copies of data and separate PC

- Global variables are the same after copy, but if one processes changes it, other processes cannot notice the change

- Process termination

- Exit: When a process executes last statement and asks OS to kill it

- Abort: parent process terminates execution of child process

- Threads

- Every thread has its own registers, PC and stack, code and data can be shared

- Motivation for threads

- Multi-processing is expensive, processes have separate memory, so each time all state information needs to be copied

- Multi-threading is cheaper, only stack, PC, registers need to be copied

- Benefits or threads

- Responsiveness: interactive program can keep running even if one thread blocks

- Resource sharing: code, address space sharing comes for free with threads, for processes, code copying required for former, IPC for latter

- Economy: slightly cheaper to context switch, much cheaper to create

- Utilization of multiprocessors, better parallelism and scalability

- Thread Control Blocks (TCB)

- Break the PCB into two pieces:

- Information related to process execution in TCB: thread register, stack pointer, cpu registers, cpu scheduling information, pending I/O information

- Other information associated with process: memory management information (heap, global variables), accounting information

- Break the PCB into two pieces:

- User threads v.s. kernel threads

- Kernel thread: managed by OS with multi-threading architecture, it's the unit of execution that is scheduled by the kernel on the cpu

- User thread: implemented with treads library, supported above the kernel and are managed without kernel support

- The mapping between user thread and kernel thread can be many-to-one, one-to-one and many-to-many

- Pros and cons

- User thread

- Pros

- Can be implemented on an os that does not support threads

- Thread switching is faster trapping to the kernel

- Thread scheduling is very fast

- Each process can have its own scheduling algorithm

- Cons

- Blocking system calls (if one thread sends a system call, the os does not know the process has multi-threading, so the whole process is blocked)

- Page faults

- Threads need to voluntarily give up the cpu for multiprogramming

- Pros

- Kernel thread

- Pros

- No run-time system needed in each process

- No thread table in each process

- Blocking system calls are not a problem (kernel knows there is multi-threading in the process, so cpu scheduler assigns another thread to run)

- Cons

- If thread operations are common, much kernel overhead will be incurred

- If a signal is sent to a process, should the process handles it or assigns a thread to handle it?

- Slower that user-space threads

- Pros

- User thread

- Thread library in Java

- Threads in Java are managed by JVM, mapping to kernel threads depends on the OS

- Thread creation

- Extends

Threadclass, overriderun()method1

2

3

4

5

6

7

8

9

10

11

12

13

14class MyThread extends Thread {

public void run() {

// code here

}

}

public class Test{

public static void main(String[] args) {

Thread thread = new MyThread();

thread.start();

}

} - Implements

Runnableinterface, overriderun()method1

2

3

4

5

6

7

8

9

10

11

12

13class MyThread implements Runnable {

public void run() {

// code here

}

}

public class Test {

public static void main(String[] args) {

Thread thread = new Thread(new MyThread());

thread.start();

}

}

- Extends

Lecture 08 2022/02/10

- Status of a thread

- init, run, blocked, dead

- Interaction between parent and child thread

- Execute concurrently: call

child.start()in the parent thread - Parent suspend until child is done: call

child.join()in the parent thread, noticejoinmethod can throwInterruptedexception, so you should use a try-catch clause. Noticechild.start()should be called beforechild.join()is called - Example

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25class MyThread implements Runnable {

public void run() {

while (true) {

if (Thread.currentThread().isInterrupted()) {

System.out.println("Child is interrupted!");

break;

}

}

}

}

public class Test {

public static void main(String[] args) {

Thread thread = new Thread(new MyThread());

thread.start();

try {

thread.interrupt();

thread.join();

} catch(InterruptedException e) {

System.out.println("Parent interrupts child!");

}

System.out.println("Parent is done!")

}

}

- Execute concurrently: call

- Cancel threads

- Call

child.interrupt()to interrupt the child thread, it sets the interrupt flag in the child thread Thread.currentThread().isInterrupted()method in the child thread: returns boolean valueinterrupted()method in the child thread: returns boolean value, and clears the interrupt flag

- Call

- Inter-process communication

- Most modern Os does not support inter-process communication

- Independent processes are not affected by execution of other processes

- Communication methods

- Message passing: direct/indirect messaging, asynchronous/synchronous messaging

- Shared memory

- Producer-consumer problem

- Buffer size: unbounded/bounded

- Reader/writer problem

- Mutual exclusion problem

Lecture 09 2022/02/15

- Scheduling

- Multiprogramming goal: avoid cpu idle time, some process is using the cpu all the time

- Typical process execution flow: cpu burst and I/O burst

- CPU-bound and I/O-bound process

- Two types of scheduling:

- No-preemptive : processes only give up cpu voluntarily

- Preemptive: processes also may be preempted by an interrupt

- Clock interrupt

- This is how system keeps track of time, the interrupt happens at periodic intervals

- This is a hardware system clock on the motherboard, it's not the same as the cpu clock

- The clock interrupt has very high priority

- Scheduling policies

- Criteria for effectiveness of scheduling policies

- Throughput

- Measured in amount of processes completed/ time unit

- Turnaround time

- Turnaround time = process completion time - process creation time(arrival time)

- Turnaround time = waiting time + execution time

- Waiting time

- Waiting time = waiting time in the ready queue

- If a process is preempted, then the waiting time is the sum of all time waiting time

- Compared with turnaround time, waiting time does not have a penalty for processes with long execution time

- Response time

- Response time = time of 1st response is produced- time of creation

- Overhead

- Time spent related to scheduling

- Fairness

- How much variation in waiting time, want to avoid process starvation

- Dispatch time

- The time it takes to choose next running process

- Dispatch time = schedule time + context switch time

- Throughput

- Generally it's not possible to meet all performance criteria for one scheduling policy

- Measures

- CPU utilization: the higher, the better

- Throughput: the higher, the better

- Turnaround time: the lower, the better

- Waiting time: the lower, the better

- Response time: the lower, the better

- Criteria for effectiveness of scheduling policies

- First Come First Serve(FCFS)

- Idea: processes are served according their arrival order

- This is a non-preemptive scheduling policy

- Pros:

- Easy to implement

- No starvation, so it's fair

- Cons

- Convoy effect: order of arrival determines performance. If a long process arrives first, then the average turnaround time, average waiting time will be long

Lecture 10 2022/02/17

- Shortest Job First

- Idea:

- Non-preemptive version: If processes arrive at the same time, choose the one with the minimal cpu burst execute first. If processes arrive at different time, whenever a process finishes, select from the arrived processes the one that has the minimal cup burst

- Preemptive version: currently running process can be preempted if new process arrives with shorter remaining CPU burst

- A problem: in reality, we do not know the amount of cpu burst, so we

need to use prediction

- Equation:

- If

- If

- Equation:

- Pros

- Optimal(minimal) for average turnaround time and for average waiting time

- Cons

- Starves processes with long cpu bursts

- It's hard to predict cpu burst time

- Idea:

- Priority-Based

- Idea: the process withe the highest priority is executed first

- SJF can be seen as a special case for priority-based scheduling in that processes with shorter cpu burst are assigned higher priorities

- Priority-based scheduling can be either non-preemptive or preemptive

- Pros

- Reflects relative importance of processes

- Cons

- Can cause starvation of low priority processes (can use aging to tackle this problem)

- Round-robin

- Each process gets a small unit of cpu time (time quantum), after quantum has elapsed, the running process is preempted and added to the end of ready queue. If a process finished in one time quantum, the next process starts immediately

- This is a preemptive only scheduling policy

- If there are n processes, and time quantum is q, then in one round, each process is executed for at most q/n time, each process waits up to (n - 1)q/n time

- If the time quantum is too large, then Round-robin becomes FCFS, if the time quantum is too small, then there will be too many context switch overheads

- Pros

- Fairness

- Lower response time than SJF

- Cons

- High context switch overhead

- Higher turnaround time than SJF

- Multilevel Queue

Idea: ready queue is partitioned into separate queues, each with its own scheduling algorithm

Inter-queue scheduling

- Fixed priority: each queue has its own priority, high priority queues are served before low priority queues, this may lead to starvation in low priority queues

- Time-slice: each queue gets a reserved slice of cpu time to schedule amongst its processes (e.g. 80% for higher priority queue, 20% for lower priority queue, this can be achieved using round-robin inter-queue scheduling algorithm, and use randomization with lottery system to achieve the respective percentage)

In UNIX, there are 32 queues, 0-7 are system queues, 8-31 are user queues. User processes can be moved between different user queues by changing its priority level

Multilevel Feedback Queue (MFQ)

Processes can move between queues

Use MFQ to mimic SJF

- Higher priority queue uses RR with low time quantum (short jobs can finish here, other jobs are downgraded to lower priority queues after short time quantum elapses)

- Lower priority queue uses RR with high time quantum

- Processes downgrade their priorities when they get older

Example:

Process Burst Time Arrival Time P1 125 0 P2 50 0 P3 500 0 P4 175 0 P5 200 250 P6 50 250

The result is

Process Arrival Time Q1 Q2 Q3 Completion Time Turnaround Time P1 0 0-75 400-450 450 450 P2 0 75-125 0 125 125 P3 0 125-200 450-600 825-1100 1100 1100 P4 0 200-275 600-700 700 700 P5 250 275-350 700-825 825 575 P6 250 350-400 400 150

Lecture 11 2022/03/01

- Real-time scheduling

- Real-time means process must/should complete by some deadline

- Hard real-time

- Definition: process must complete by some deadline, this requires dedicated scheduler

- Idea:

- Determine feasible processes(deadline - current time

- Greedy heuristic: choose process with min value of [deadline - current time - cpu burst]

- Determine feasible processes(deadline - current time

- Soft real-time

- Definition: process should complete by some deadline, this can be integrated into Multi-level feedback queues

- Idea: processes should not be demoted, requires strict time-slices

- Scheduling examples

- Windows

- Windows uses priority-based, doubly preemptive scheduling, preemption occurs with both end of time quantum and arrival of higher-priority thread

- Thread priority has different components

- Statically assigned priority class

- Dynamically assigned relative priority (expiration of time quantum, satisfaction of I/O)

- FG processes are given 3 times priority of the BG ones

- Windows 7 add user-mode scheduling(UMS): applications create and manage threads independent of kernel

- Linux

- Linux has two classes of processes: real-time processes and time-shared processes

- Real-time processes: have soft deadline, have highest-priority, are within same priority class, use FCFS or RR as scheduling policy

- Timesharing processes: default ones, have credits(similar to aging), initialize credits based on priority and history, lose credits when time-sliced out, scheduler chooses process with the most credits

- Java JVM

- Java uses preemptive, priority-based scheduling. Preemption happens at the arrival of higher priority thread

yield(): running thread yields to another thread of equal priority- Thread priorities:

Thread.MIN_PRIORITY,Thread.MAX_PRIORITY,Thread.NORM_PRIORITY

- Windows

- Synchronization overview

- Idea: processes, threads use shared data access to communicate. Shared data access can result in data inconsistencies and unpredictable processes/threads behaviors. So synchronization is needed to prevent that

- Definition

- Race condition: outcome of thread execution depends on timing of threads

- Synchronization: use of atomic operations to ensure correct cooperation amongst threads

- Mutual exclusion: ensure that only one thread does a particular thing

- Critical section: piece of code that only on thread can execute at any time (if multiple threads access critical section, there will be data inconsistencies)

- Lock: construct that prevents someone from doing something

- Starvation: occurs when one or more threads never gets access to critical section

Lecture 12 2022/03/04

- The milk problem: suppose there is not milk, and we want only one

bottle of milk

- Solution 1

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17// Person A

if (noMilk) {

if (noNote) {

leave note;

buy milk;

remove note;

}

}

// Person B

if (noMilk) {

if (noNote) {

leave note;

buy milk;

remove note;

}

}- This one does not work, think of context switch after the second if, both will leave a note and buy a bottle of milk, then there will be two bottles of milk in the end

- Solution 2:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17// Person A

leave noteA;

if (noNoteB) {

if (noMilk) {

buy milk;

}

}

remove noteA;

// Person B

leave noteB;

if (noNoteA) {

if (noMilk) {

buy milk;

}

}

remove noteB;- This one does not work, think of the situation A starts first, there is a context switch after the first if to B, and another switch back to A after executing the first line of B, then neither of A or B will buy a bottle of milk

- Solution 3:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20// Person A

leave noteA;

// Denote this line X

while (noteB) {

skip;

}

if (noMilk) {

buy milk;

}

remove noteA;

// Person B

leave noteB;

// Denote this line Y

if (noNoteA) {

if (noMilk) {

buy milk;

}

}

remove noteB;- This one works

- X and Y are lines that after which critical sections are executed, so we think of situations here

- At Y:

- If noNoteA: A has not start, or A has already bought milk, B can buy milk if no milk, or does not buy milk if there is milk

- If noteA: A is waiting B to quit, or A is buying milk, so B does buy and quit

- At X:

- If noNoteB: B has not start, or B has ready bought milk, A can buy milk if no milk, or does not buy milk if there is milk

- If noteB: B is waiting A to quit, or B is buying milk, so A waits B to remove note, then checks if there is milk to decide whether to buy or not

- Disadvantages:

- This approach is complicated

- This is not a symmetric solution, code needs to be different for each process, can not be generalized to multiple processes situations easily

- A is busy waiting

- A is favored: if A and B have different goals, A is more favored than B

- This one works

- Better solutions

- Have hardware provide better primitives than atomic LOAD, STORE

- Build higher-level programming abstraction on above hardware

instructions, for example: lock with atomic

acquire()andrelease() - Milk solution example

1

2

3

4

5

6// Person A and Person B

lock.acquire();

if (noMilk) {

buy milk;

}

lock.release();

- Solution 1

- The critical section problem

- Idea: only one thread is executing the critical section at a time to avoid race condition

- Examples

- Milk synchronization:

if (noMilk) {buy milk} - Bank transfer synchronization:

acc1 -= amount; acc2 += amount; - Producer consumer problem:

- Problems in the producer's method

1

2

3

4

5

6

7

8

9

10public void insert(Object item) {

while (count == size) {

skip;

}

count ++;

// If context switch happens here, then consumer thinks there is one

// more items than there actually is

buffer[in] = item;

in = (in + 1) % size;

} - Additional problems:

count++/count--1

2

3

4// There can be context switches between these three instructions

LOAD R1, count

INC R1 // DEC R1 for count--

STORE R1, count

- Problems in the producer's method

- Milk synchronization:

- For efficiency consideration, it's important to identify the exact lines of code that is the critical section

- General form of threads

1

2

3

4

5

6while (true) {

entry code;

critical section;

exit code;

non-critical section;

} - General solution to critical section problems

- Clever algorithms

- Hardware-based solutions

- Hardware-based solutions + software abstractions

- Goals of solution

- Mutual exclusion

- Progress: no thread outside of its critical section should block other threads

- Bounded waiting: if a thread has already made a request to enter its critical section, then the amount of times other threads should only enter their critical sections for a bounded amount of times

- Software solution to critical section problems

- Strict alternation

- Idea: each thread take turns to get into its critical section

- Pseudocode

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19shared int turn;

// Thread A

while (true) {

while (turn != 0) {

skip;

}

critical section;

turn = 1;

non-critical section;

}

// Thread B

while (true) {

while (turn != 1) {

skip;

}

critical section;

turn = 0;

non-critical section;

} - Strict alternation satisfies both mutual exclusion, bounded waiting, but does not satisfy progress

- Disadvantage:

- Does not satisfy progress

- Use busy waiting, waste lots of resources

- Faster threads can get blocked by slower ones

- After you

- Idea: thread specifies interest in entering critical section, and can only enter if other thread is not interested

- Pseudocode

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21shared boolean flag0, flag1;

//Thread A

while (true) {

flag0 = true;

while (flag1) {

skip;

}

critical section;

flag0 = false;

non-critical section;

}

//Thread B

while (true) {

flag1 = true;

while (flag0) {

skip;

}

critical section;

flag1 = false;

non-critical section;

} - After you satisfies mutual exclusion, but does not satisfy progress and bounded waiting

- Strict alternation

Lecture 13 2022/03/10

- Software solution to critical section problems

- Peterson's algorithm

- Idea: combination of strict alternation and after you, threads take turns to get into critical section but a thread skips its turn if it's not interested and another thread is

- Pesudocode

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20shared int turn;

shared boolean flag0, flag1;

// Thread A

flag0 = true;

turn = 1;

while (turn == 1 && flag1) {

skip;

}

critical section;

flag0 = false;

non-critical section;

// Thread B

flag1 = true;

turn = 0;

while (turn == 0 && flag0) {

skip;

}

critical section;

flag1 = false;

non-critical section; - Peterson's algorithm satisfies mutual exclusion, progress and bounded waiting

- Peterson's algorithm

- Hardware solutions to critical section problems

- Disabling interrupts

- Idea: disable interrupts before entering critical section and enable interrupts after exiting critical section

- Pseudocode

1

2

3

4

5

6

7// All threads

while (true) {

disable interrupts;

critical section;

enable interrupts;

non-critical section;

} - Comments

- Advantages: this solution is easy to implement

- Disadvantages:

- Overkill: disables all interrupts(I/O), disallows concurrency with threads in non-critical sections

- Does not work for multi-core systems, because each cor has its own interrupts, so threads on different cores can access their critical sections at the same time

- Test and set

- An assembly command called TS(test and set), it's an atomic operation

- What does

TS(i)do- Save the value of

Mem[i](Mem[i] stores a boolean value of a lock) - Set

Mem[i] = True - Return the original value of

Mem[i]

- Save the value of

- If TS(i) returns True, then the lock is locked, so we cannot access the critical section

- Solve the critical section problem with TS

1

2

3

4

5

6

7

8

9shared bool lock = false;

while (true) {

while (ts(lock)) {

skip;

}

critical section;

lock = false;

non-critical section;

} - Comments

- Advantages: easy to use for critical sections, not easy to use for other synchronization problems

- Disadvantages: busy waiting is involved, can be expensive on multi cores

- Semaphores: software abstraction of hardware

- Idea: a semaphore is a data structure consisting of an integer lock

with a waiting queue, it provides thread safe operations

init(n): n threads are allowed access to resource at a timeacquire(): thread code calls to gain accessrelease(): thread code calls to relinquish access

- Binary semaphore: n = 1, only one thread can hold lock at a time

- Counting semaphore: n threads can hold lock at a time

- A semaphore can be either unlocked or locked by a thread with other threads waiting in the queue

- Critical section solved with binary semaphore

1

2

3

4

5

6

7shared binary semaphore S = 1;

while (true) {

acquire(S);

critical section;

release(S);

non-critical section;

}

- Idea: a semaphore is a data structure consisting of an integer lock

with a waiting queue, it provides thread safe operations

Lecture 14 2022/03/15

- Bounded buffer problem

- Shared buffer between producer thread and consumer thread

- Terminology

- buffer: circular queue, shared by all threads

- in: a pointer that points to next spot to insert into buffer, shared by all producer threads

- out: a pointer that points to next spot to remove from buffer, shared by all consumer threads

- Full buffer: ensure producer does not try to insert into a full buffer (wait until not full)

- Empty buffer: ensure consumer does not try to remove from an empty buffer (wait until not empty)

- Semaphores (buffer size N)

empty: measure the amount of empty spots in the buffer, initialized to be N, shared by all producers, locked after N producer threads acquire the the semaphore (which means the buffer is full)full: measure the amount of full spots in the buffer, initialized to be 0, shared by all consumers, unlock after 1 producer thread release the semaphore (which means the buffer is not empty, so the consumer can start consuming)mutex: binary semaphore, shared by all threads, used to ensure atomic update of shared variables

- Implementation

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30Semaphore mutex = new Semaphore(1);

Semaphore empty = new Semaphore(N);

Semaphore full = new Semaphore(0);

int in = 0;

int out = 0;

T[] buffer = new CircularQueue<T>(N);

//producer thread

while (true) {

empty.acquire();

// This line should be after empty.acquire(), because if you acquire mutex first

// and the buffer is full, then there is no way for consumers to acquire the mutex

// and increase the empty semaphore

mutex.acquire();

buffer[in] = new T();

in = (in + 1) % N;

// This line can be after the release of the full semaphore, but efficiency is

// reduced then

mutex.release();

full.release();

}

// consumer thread

while (true) {

full.acquire();

mutex.acquire();

T item = buffer[out];

out = (out + 1) % N;

mutex.release();

empty.release();

} - Bounded buffer with only one binary semaphore and spin lock

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21public void insert (T item) {

mutex.acquire();

while (count === N) {

mutex.release();

// Context switch can happen here, allowing consumers to remove

mutex.acquire();

}

// Insert goes here

}

public T remove() {

T item;

mutex.acquire();

while (count == 0) {

mutex.release();

// Context switch can happen here, allowing producers to insert

mutex.acquire();

}

// Remove goes here

return item;

} - The single mutex and spin lock approach does eliminate inconsistencies, but it involves busy waiting and waste of CPU resources

Lecture 15 2022/03/17

- Readers-writers problem

- Idea:

- A buffer of updatable data

- Two type of threads: readers and writers

- Synchronization rules: can allow access of either any number of readers at a time, or exactly one writer

- Database example

- Read is query: queries do not change contents of database, several can execute concurrently

- Write is update: if an update is taking place, it cannot allow either reads or other writes

- Approach 1: a reader-favored solution

- Idea

rcount: the number of readers that is currently reading the databasemutex: a binary semaphore that is used to guard the modification of rcount among readersrwlock: a binary semaphore shared among readers and writers- It can be acquired by a writer to ensure that no other writer is updating the databsae concurrently

- It can be acquired by the first reader to ensure that no writer is updating the database when there are readers reading the database

- It should be released by the last reader to allow writers to update the database

- Implementation

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35// Shared variables initialization

int rcount = 0;

Semaphore mutex = new Semaphore(1);

Semaphore rwlock = new Semaphore(1);

// Writer thread

while (true) {

// If this acquire method does not stuck, it means no other writer is updating

// the database and no other readers are reading the database

rwlock.acquire();

// Update the database

rwlock.release();

}

// Reader thread

while (true) {

// Use mutex to ensure exclusive access to rcount

mutex.acquire();

rcount ++;

// If the reader is the first one, then it should not let writers update the

// database, so it should acquire the rwlock

if (rcount == 1) {

rwlock.acquire();

}

mutex.release();

// Read database

// Use mutex to ensure exclusive access to rcount

mutex.acquire();

rcount --;

// If the reader is the last one, then it should release the rwlock to let

// writers run

if (rcount == 0) {

rwlock.release();

}

mutex.release();

} - Performance: Give high throughout (there can be many readers active at a time), but bounded wait not satisfied (the writers may wait indefinitely)

- This is a readers-favored solution: as long as the writer starts after the 1st reader, it needs to wait for all readers to finish to run

- Idea

- Approach 2: a writers-favored solution:

- Idea

rcount,wcount,mutex1for rcount,mutex2for wcount,rlock,wlock- Implementation

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49// Shared variables initialization

int rcount = 0;

int wcount = 0;

Semaphore mutex1 = new Semaphore(1);

Semaphore mutex2 = new Semaphore(1);

Semaphore rlock = new Semaphore(1);

Semaphore wlock = new Semaphore(1);

// Reader thread

while (true) {

// Use wlock to wait writers to finish updating the database

wlock.acquire();

mutex1.acquire();

rcount ++;

if (rcount == 1) {

// Lock the database so no update can happen during writing

rlock.acquire();

}

mutex1.release();

wlock.release();

//Read database

mutex1.acquire();

rcount --;

if (rcount == 0) {

rlock.release();

}

mutex1.release();

}

// Writer thread

while (true) {

mutex2.acquire();

wcount ++;

if (wcount == 1) {

wlock.acquire();

}

mutex2.release();

// If there are readers reading the database, then wait; if not, block all

// subsequent readers

rlock.acquire();

// Update database

rlock.release();

mutex2.acquire();

wcount --;

if (wcount == 0) {

wlock.release();

}

mutex2.release();

} - Performance: the writers can run ASAP, but readers can wait indefinitely

- Idea

- Idea:

- Dining Philosopher

- Idea

- N philosophers sit a table with N chopsticks, each philosopher shares 2 chopsticks with her left and right neighbor

- Each philosopher thinks for a while, gets hungry then tries to grab both chopsticks next to him, and eats then releases chopsticks

- The question is how to serve dishes for the philosophers

- Solution 1: ensure only one philosopher grabs a given chopstick

- Implementation

1

2

3

4

5

6

7

8

9

10

11

12// Shared variables initialization

// An array of N binary semaphores

Semaphore[] chopsticks = new Semaphore[N];

// For philosopher i thread

while (true) {

chopsticks[i].acquire();

chopsticks[(i + 1) % N].acquire();

// Eat

chopsticks[i].release();

chopsticks[(i + 1) % N].release();

// Think

} - Performance: this may result in a deadlock

- Implementation

- Solution 2: ensure only one philosopher grabs both her chopsticks at

a time

- Implementation

1

2

3

4

5

6

7

8

9

10

11

12

13

14// Shared variables initialization

Semaphore mutex = new Semaphore(1);

Semaphore[] chopsticks = new Semaphore[N];

// For philosopher i thread

while (true) {

mutex.acquire();

chopsticks[i].acquire();

chopsticks[(i + 1) % N].acquire();

mutex.release();

// Eat

chopsticks[i].release();

chopsticks[(i + 1) % N].release();

// Think

} - Performance: this does not result in a deadlock, but philosophers that are not eating (not in the critical section) can block following philosophers (when she acquires the mutex, but is blocked by acquiring chopsticks)

- Implementation

- Solution 3: synchronize philosophers states (hungry, eating,

thinking) rather than synchronizing chopsticks

- Implementation ## Lecture 16 2022/03/22

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30// Shared variables initialization

// states[i] can be hungry, eating and thinking

State[] states = new State[N];

// if phils[i] is locked, she cannot eat, if phils[i] is unlocked, she can eat

Semaphore[] phils = new Semaphore[N];

// Use mutex to avoid race condition in states

Semaphore mutex = new Semaphore(1);

void seekToEat(i) {

if (states[i] == hungry && states[(i - 1) % N] != eating && states[(i + 1) % N] != eating) {

states[i] = eating;

phils[i].release();

}

}

// For philosopher i thread

while (true) {

mutex.acquire();

states[i] = hungry;

seekToEat(i);

mutex.release();

phils[i].acquire();

// Eat

mutex.acquire();

states[i] = thinking;

seekToEat((i + 1) % N);

seekToEat((i - 1) % N);

mutext.release();

// Think

}

- Implementation

- Idea

- Alternatives to Semaphores: Monitors

- Performance of semaphores

- Semaphores accomplishes two tasks:

- Mutual exclusion of shared memory (mutex)

- Scheduling constraints: down and up(counting semaphore)

- If the down and up are scatter among several processes, problems may

occur

- If the order of down is wrong, deadlocks may occur

- If the order of up is wrong, performance may be poor

- We want something that is simple and easy

- Semaphores accomplishes two tasks:

- Implementation of monitors

- A package that consists of a collection of procedures, variables and data structures

- Compiler implements the mutual exclusion on monitor entries

- Performance of semaphores

- Monitors

- Definition: a monitor is an abstract data type that provides a high-level form of process synchronization

Thread.yield(): if a thread calls this method, it voluntarily relinquishes the CPU- If there are threads with equal or higher priorities, then the thread changes its state from running to ready and gives up the CPU

- If there are no threads with equal or higher priorities, then the thread continues to run

- Bounded buffer problem revisited

- We can replace the mutex and spin lock solution with

Thread.yield() - Since

Thread.yield()does not ensure mutual exclusion, we can change the insert and remove method tosynchronized - Such synchronized insert and remove methods can result in deadlocks

- We can replace the mutex and spin lock solution with

- Synchronized methods

- All objects in Java have an associated lock

- Calling synchronized method requires owning the lock, if the calling thread does not own the lock, it is placed in the entry set for the object's lock, the lock is released when the active thread exits the synchronized method

- Condition variables

wait()andsignal()

- Java thread methods

t.wait(): t release the object's lock, change the state of t to blocked, t is placed in the wait sett.nofity(): select a thread t' from the wait list of the object's lock, move t' to the entry set, change the state of t' to ready

Lecture 17 2022/03/24

- Deadlock

- Definition: deadlock occurs in a set of processes when every process in the set is blocked and waiting for an even that can only be caused by another process in the set

- Four necessary but not sufficient conditions of deadlock

- Mutual exclusion: only one process can use a resource at a time

- Hold & wait: process holding more than one resources is waiting to acquire others hold by other processes

- No preemption: resources only released by processes voluntarily when they finish using the resources

- Circular wait: there exists a set of waiting processes,

- An deadlock example

1

2

3

4

5

6

7

8

9Semaphore m1 = new Semaphore(1); // binary semaphore m1

Semaphore m2 = new Semaphore(1); // binary semaphore m2

// Thread A

m1.acquire(); // Context switch after this line to Thread B

m2.acquire();

// Thread B

m2.acquire();

m1.acquire(); - Starvation is different from deadlock

- For a deadlock: all threads are suspended

- For a starvation: only part of threads are suspended (for example, threads with lower priorities in the priority scheduling policy)

- Deadlock problem descriptions

- Models

- Resource types:

- Each resource type

- Each process requests a resource (wait until the resource is granted), use the resource, and release the resource

- System table records if a resource is free or not, process it allocated, and queue for processes waiting for each resource

- Resource types:

- Models

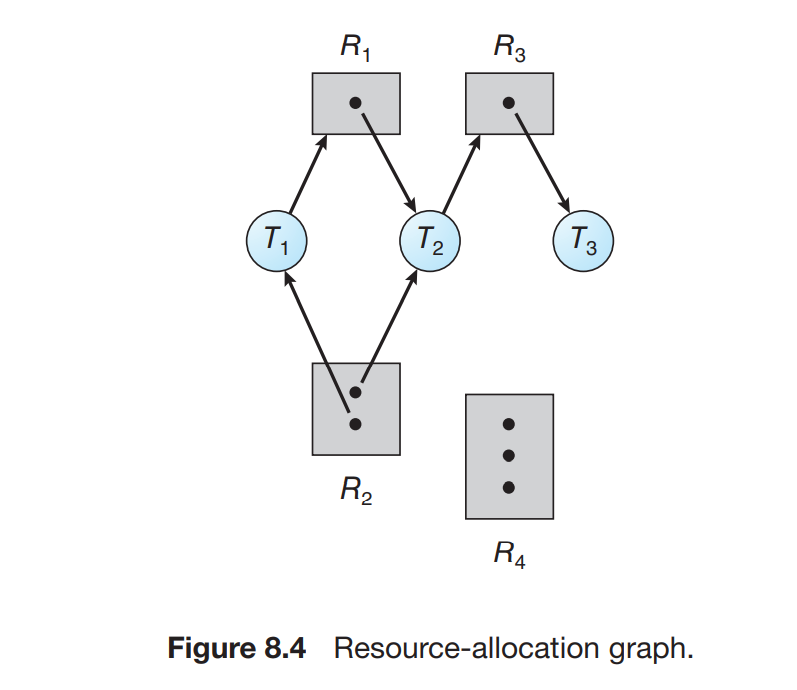

- Resource allocation graph

- V: set of vertices

- P =

- R =

- P =

- E: set of edges

- Request edge:

- Assignment edge:

- Request edge:

- Some interpretations

- No cycle, then no deadlock

- Cycle and n instances per resource type, then possible deadlock

- Cycle and one instance per resource type, then deadlock for sure

- Processes that are not in a cycle can also be in deadlock

- V: set of vertices

- Dealing with deadlock

- Prevention: breaking one of the three conditions with

deadlock(mutual exclusion cannot be broken), so deadlocks will not occur

- Break hold & wait: before execution, processes try to acquire all resources they need, if they cannot do so, they are allocated no resources

- Break preemption: if a process holds some resources and requires more but cannot get them, then the process releases all resources it currently holds

- Break circular wait: processes must request resources in an increasing order

- Prevention: breaking one of the three conditions with

deadlock(mutual exclusion cannot be broken), so deadlocks will not occur

Lecture 18 2022/03/29

- Deadlock Avoidance

- Does not break the necessary conditions for deadlock, but monitor the system, and if a request will lead the system to unsafe state, such request is rejected

- System state

- In a safe state, there is no possibility of deadlock, we can find a safe sequence to grant the requests in a safe state

- In an unsafe state, there is possibility of deadlock

- Avoidance: never enter an unsafe state

- Banker's algorithm: deadlock avoidance

- Terminology

Available: array with length m, available resources for each resource typeMax: n * m matrix, process i request max[i][j] instances of resource jAllocation: n * m matrix, process i is allocated allocation[i][j] instances of resource jNeed: n * m matrix, need[i][j] = max[i][j] - allocation[i][j]

- Something to remember

- Available can be calculated from total available resources and allocated resources

- If a request is granted, then the allocated resources should be added to available resources

- Check if a request should be granted: add the request to allocation, if we can find a safe sequence, then the result is a safe state, so the request should be granted, but if somewhere in the middle we cannot grant any more process for execution, the the system enters an unsafe state, so the request should not be granted

- Terminology

- Deadlock detection + recovery

- Idea: allow system to enter deadlocked states, periodically run deadlock detection algorithm. If deadlock is detected, then run recovery scheme to break the deadlock

- Detection situation 1: each resource type has one instance

- Idea: we just need to check if there is a cycle in the resource allocation graph

- Algorithm: use DFS to check if a directed graph has a backward edge, then the system is in a deadlocked state, the time complexity is O(V + E)

Lecture 19 2022/03/31

- Deadlock detection + recovery (ctd)

- Detection situation 2: each resource types have multiple instances,

we use a detection algorithm that is similar to the banker's algorithm

- If some requests can be granted, then they are not deadlocked.

- The requests that cannot be granted are deadlocked

- Time complexity of the deadlock detection algorithm:

- The deadlock detection algorithm is expensive, therefore we do not run this deadlock detection algorithm after resource allocation. The frequency of the detection algorithm depends on how often a deadlock is likely to occur and how many processes will need to be rolled back

- Deadlock recovery

- Recovery approach 1: process termination

- Kill all deadlocked processes

- Kill one deadlocked process at a time until the deadlock cycle is eliminated (select the process to be killed based on priority, completion percentage, resources held, resources requested, etc.)

- Recovery approach 2: preempt resources from deadlocked processes

- Preemption cost should be minimal

- If a process is preempted, it should be rolled back to a previous safe state and restart from that state

- We need to ensure wo do not always preempt resources from the same process, which can avoid starvation of that process

- Recovery approach 1: process termination

- Detection situation 2: each resource types have multiple instances,

we use a detection algorithm that is similar to the banker's algorithm

- The Ostrich method

- As the deadlock can be very infrequently and the cost of handling can be very high, the OS can just pretend there are no deadlocks

- Most OSs use this method and let the programmers to handle deadlocks

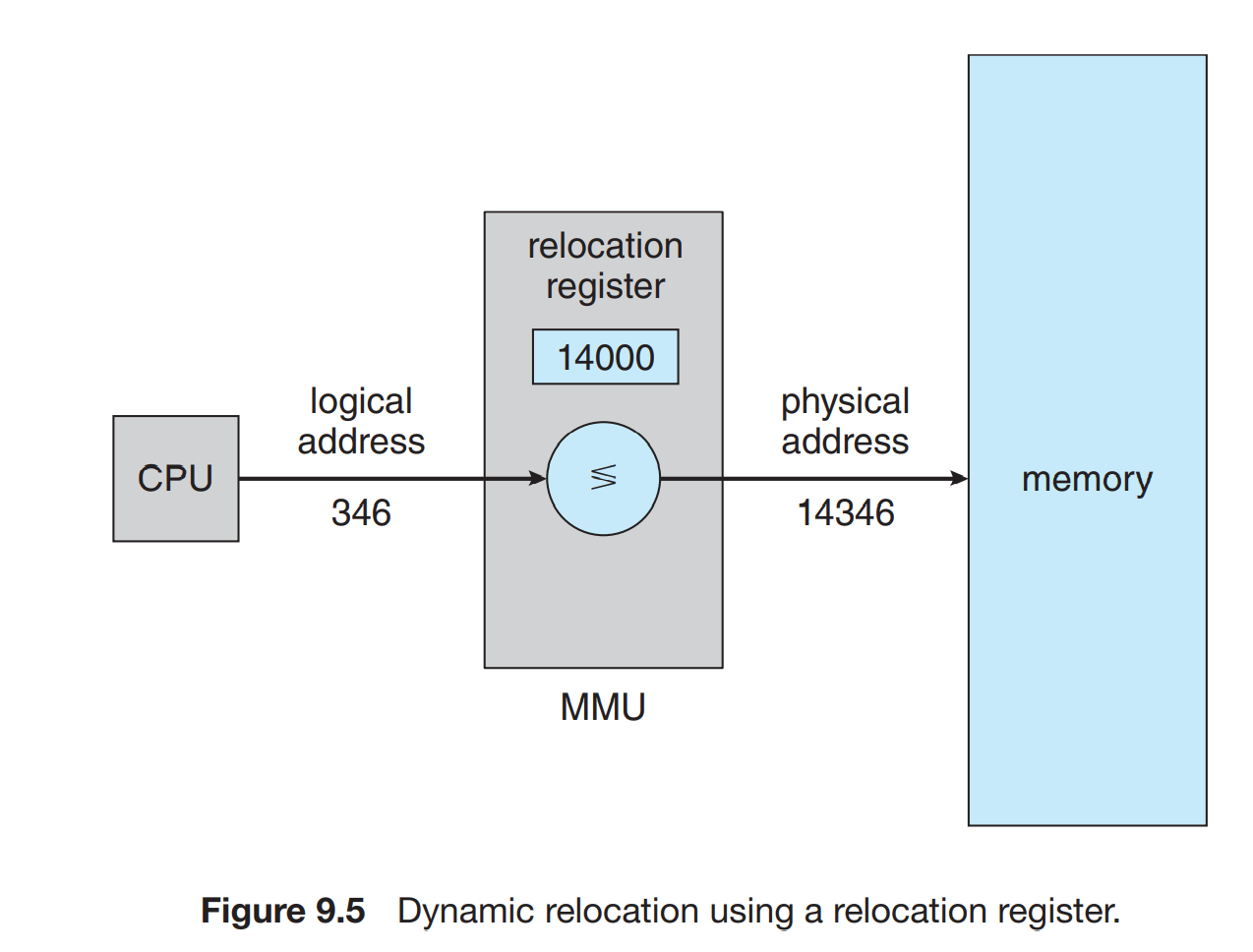

- Memory Manager

- Memory manager is another resource manager component of the OS

- Responsibilities

- Memory allocation: responsible of allocating memory to processes

- Address mapping

- Address mapping allows to run the same code in different physical memory in different processes, and to support process address space abstraction

- Memory management unit (MMU) is responsible for mapping logical addresses generated by CPU instructions to physical addresses seen by memory controller

- MMU is usually on the same chip as the CPU

- Address mapping

- Logical addresses

- Generated by CPU instructions, is an abstraction of memory

- Logical addresses start at 0, and are contiguous

- Generating logical addresses

- At compile time

- At link/load time

- At execution time

- Logical address space is defined by base and limit registers. If the address is not in the range, there are traps to the OS

- Logical addresses

- Memory allocation

- Memory allocation goals

- High memory utilization: ensure as much memory is used as possible

- High concurrency: support as may processes as possible

- Memory allocation should be fast

- Memory allocation strategies

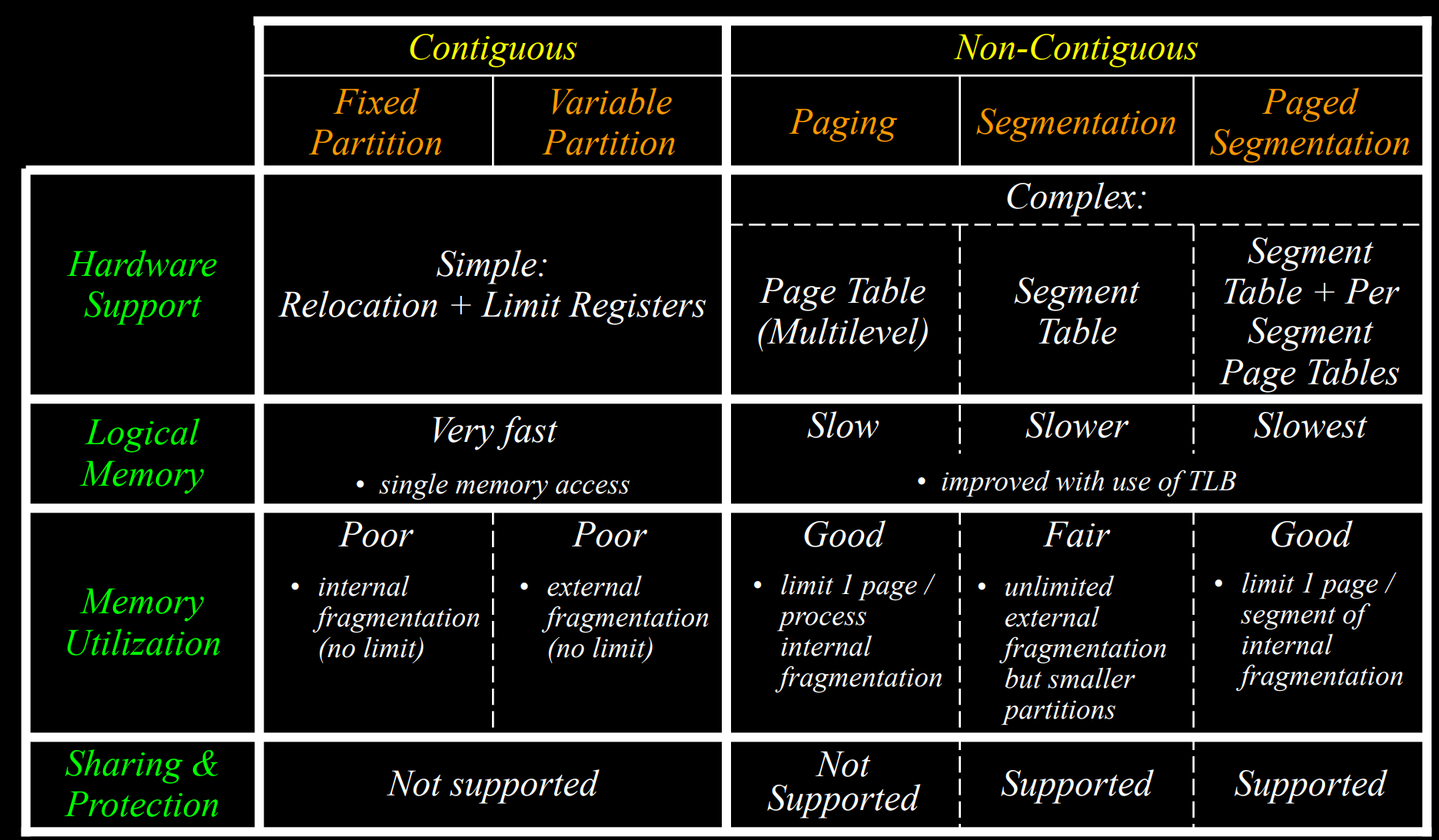

- Contiguous memory allocation

- Fixed partition allocation

- Idea: divide the physical address space into fixed partitions and allocate processes into the partitions. One partition can hold at most one process

- Implementation: requires the support of relocation (base) and limit register

- Issues:

- Internal fragmentation: when a process is allocated to a partition and parts of the partition is not used, such part is called the internal fragmentation

- We need to know in advance the memory needs of processes

- The memory needs of processes should not change during their lifetime

- Fixed partition allocation

- Contiguous memory allocation

- Memory allocation goals

Lecture 20 2022/04/05

- Memory allocation (ctd)

- Memory allocation strategies (ctd)

- Contiguous memory allocation (ctd)

- Variable partition allocation

- Idea: physical memory is not divide into fixed partitions, but has a set of holes from which memory can be assigned

- Allocation policies

- Best fit: choose the smallest feasible hole

- Worst fit: choose the largest feasible hole

- First fit: choose the first feasible hole (do not need to search the list)

- Next fit: choose the next feasible hole (do not need to search the list, do not need to start from the beginning)

- Issues:

- External fragmentation: the size of a hole may be larger than the needs of a process, the difference is called the external fragmentation

- External fragmentation can be periodically eliminated via compaction

- Comments

- Contiguous memory allocation is easy to implement and conceptualize

- Contiguous memory allocations is faster than non-contiguous memory allocation

- The memory utilization is poor

- Used by some early batch systems

- Variable partition allocation

- Non-contiguous memory allocation

- Idea: logical address space is partitioned, each partition is mapped to a contiguous chunk of physical memory

- Approach 1: paging, all chunks are the same size, chunks are physically determined

- Approach 2: segmentation, chunks are variably sized, chunks are logically determined

- Approach 3: paged segmentation, combination of paging and segmentation

- Comments:

- Better memory utilization: by dividing address space into smaller chunks, more memory fragments can be used

- More complex implementation

- The standard memory allocation system used by all OS today

- Contiguous memory allocation (ctd)

- Memory allocation strategies (ctd)

- Paging

- Basic idea

- Divide physical memory into fixed-size blocks called frames

- Divide logical memory into same-sized blocks called pages

- Within a page, memory is contiguous, but pages need not to be contiguous or in order

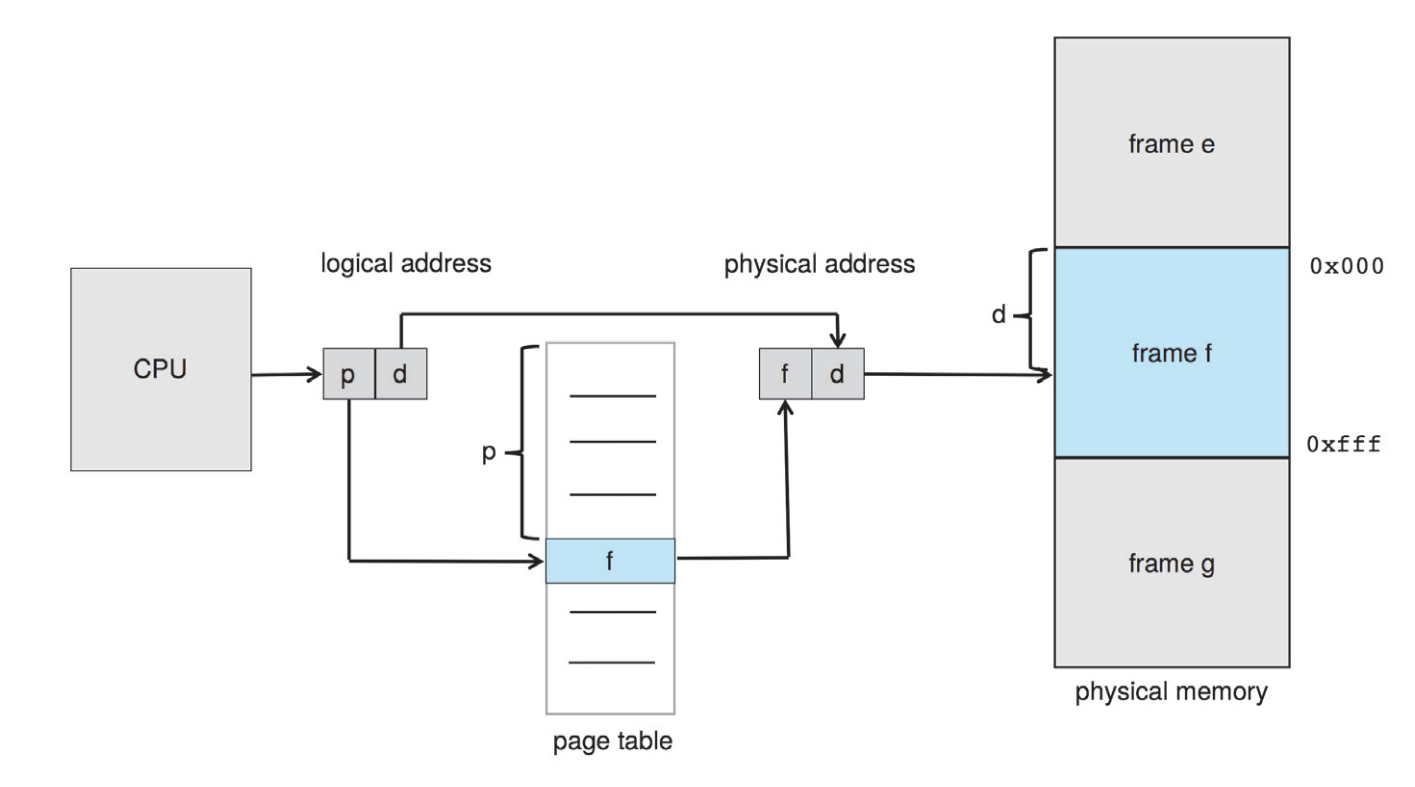

- Address translation

- Logical address = (p, d), physical address = (f, d)

- p: page number (index into page table which contains base address of corresponding frame f)

- d: page offset: added to base address to find location within page/frame

- Logical and physical addresses are binary, so the translation is actually in binary form

- Logical address = (p, d), physical address = (f, d)

- Basic idea

Lecture 21 2022/04/07

- Paging (ctd)

- Page table implementation

- Page table is kept in the main memory

- Each process use process special status registers:

- Page table base register (PTBR): location of page table for process

- Page table limit register(PTLR): size of process page table

- Issues with paging

- Two memory access problem: use TLB

- Page table is too large:

- Use two level page table

- Use inverted page table

- Use hashed page table

- Improve memory access with TLB

- The implementation of page table requires 2 memory accesses for each

operation

- Look up the frame location for this process in page table

- Loop up the offset in the frame to find the location of the data

- Use translation loop-aside buffer to solve the two memory access

problem

- TLB is a fast lookup hardware cache containing some page table entries

- Why TLB is fast

- TLB is cache, and cache is faster than memory

- Associative memory permits parallel search

- When doing to page table lookup, first look in the cache TLB, if no in cache (called TLB miss), look in memory page table

- Effective access time (EAT) with TLB

- If TLB misses, then the performance is even worse than the page table lookup with two memory accesses (additional access in TLB)

- Assumption

- Each memory access takes time of 1

- Each TLB access takes

- TLB hit ratio

- EAT =

- If the hit ratio

- If there is a TLB miss, then we need to update the TLB entries

- The implementation of page table requires 2 memory accesses for each

operation

- Two level page table

- Page table is stored contiguously in the physical memory

- In 32-bit virtual memory, 12 bits are used for offset, 20 bits are used for page table, page table entry size is 4 bytes, then page table size is 4 * 2 ^ (32 - 12) = 4MB

- In 32-bit virtual memory, 12 bits are used for offset, 10 bits are used for outer (primary) page table, 10 bits are used for inner (secondary) page table, page table entry size is 4 bytes, then outer page table size is 4 * 2 ^ 10 = 4KB, inner page table size is 4 * 2 ^ 10 = 4KB, there is one outer page table and 2 ^ 10 inner page tables

- In the above two level page table, one virtual address translation involves the outer page table and one inner page tables

- Inverted page table

- For ordinary page table, each process has a page table

- For inverted page table, there is only one page table for all processes

- Comments

- Reduced memory space

- Longer lookup time

- Difficult shared memory implementation

- Hashed page table

- Page number is hashed to hashed page table

- Page table implementation

Lecture 22 2022/04/14

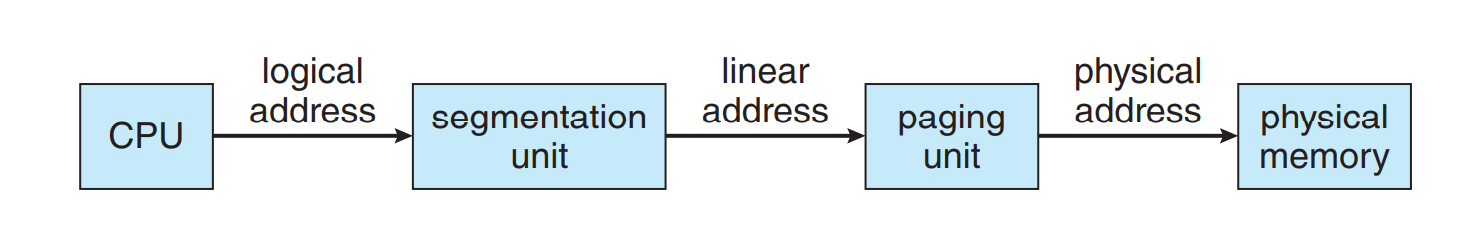

- Segmentation

- Idea: similar to paging, but partitions of logical address space/physical memory are variable, not fixed. Partition the logical address into text segment, stack segment, etc. Each segment itself is continuous, but the page table of a process as a whole is not.

- Architecture

- Logical addresses = (s, d)

- One process has one segment table

- Each process PCB holds segment table base register (STBR) and segment table length register(STLR). If a logical address is invalid, trap to the OS

- Issues with segmentation

- Two memory accesses problem: use TLB

- Segment table is too large: use paged segmentation: segmentation based allocation, but each segment is paged

- Comments on segmentation

- Easier to implement protection: text segment should be read-only, other segments may be non-executable. Page in the paging scheme can contain both code and data

- Easier to implement sharing: same text segment can be used by multiple processes concurrently

- Summary

- Virtual memory

- Motivation: increase the degree of multiprogramming in a running system

- Means

- High memory utilization, flexible memory allocation: allow allocated memory to be non-contiguous

- Swapping and virtual memory: allow some process to have address space dynamically mapped to physical memory

- Swapping

- Idea: ready or blocked processes have no physical memory allocation, instead, their address spaces are mapped to disk

- Processes can be swapped out to disk and swapped into memory dynamically

- Comments:

- Multiprogramming is limited not to memory but disk

- Easy to implement

- Expensive: involves a lot of disk I/O

- Time variable: depends on process size

- Virtual memory

- Idea: ready, blocked, or running process have some (but not all) of their allocation of physical memory

- Implementation: demand paging/demand segmentation

- Demand paging

- Idea: bring a page into memory only when it's referenced (read or written)

- Implementation

- Add valid/invalid bit to each page table entry: 1 is valid, which means the page is in memory, 0 is invalid, which means the page is not in memory

- Page mapping algorithm

- Logical address = (p, d)

- Search p in page table, if valid, convert p to f and get physical address = (f, d). If invalid, trap to page fault routine in OS to bring p into a free frame and update page table, then restart translation (during page fault, control is passed to OS, and when the handling process is done, control is returned to the process, the process does not know where to start from during execution, and it cannot start in the middle of an instruction, so it needs to restart)

- Submit memory request with (f, d)

- Pre-paging: load working set pages into memory before the process starts

Lecture 23 2022/04/26

- Virtual memory

- Page replacement

- If there is no free frame, then we need to replace a existing page in the memory using replacement algorithm

- Page replacement algorithms

- Goal: minimize number of page faults

- If page fault rate is

- Algorithms

- FIFO:

- Replace pages that has the oldest time when it was brought into the memory is replaced

- Belady' anomaly: when the number of allocated frames increases, page fault rate increases

- Optimal: replace the page that will not be used for the longest period time

- LRU: replace the page that hasn't been used for the longest period time

- NRU: not recently used

- LFU: replace page referenced fewest times

- FIFO:

- Frame allocation

- Idea: we have one set of frames but multiple processes, the problem is how to allocate the processes

- Goal:

- Minimize page fault rates

- Avoid any process thrashing

- Allocation policy

- Equitable: each process get even share of frames